Outcome of the “Best Classification Challenge” of the 2013 IEEE GRSS Data Fusion Contest

More than 50 teams from around the world entered the “Best Classification Challenge” of the 2013 IEEE GRSS Data Fusion Contest, proposing innovative approaches to the analysis of hyperspectral and LiDAR data.

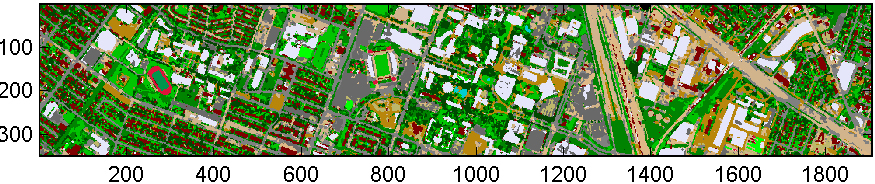

The top-ten results, ranked based on their accuracy, are summarized below along with a brief description of the algorithms and the contact information of the authors. The color scheme for the classification maps is as follows:

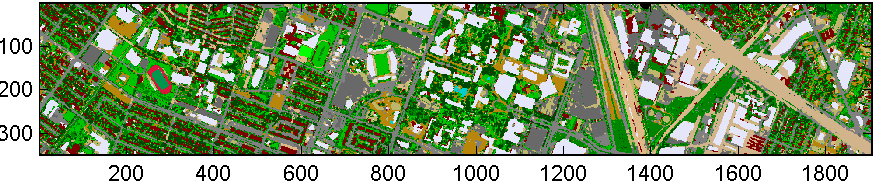
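The entries below are compared by overall accuracy (OA, in %) and Cohen's kappa statistic. As a minimal sketch, assuming the reference and predicted labels are available as integer arrays (the function name `accuracy_and_kappa` is illustrative and not part of any contest tooling), both figures can be computed from a confusion matrix:

```python
import numpy as np

def accuracy_and_kappa(y_true, y_pred, n_classes):
    """Return overall accuracy (in %) and Cohen's kappa from label arrays."""
    y_true = np.asarray(y_true).ravel()
    y_pred = np.asarray(y_pred).ravel()
    # Confusion matrix: rows are reference classes, columns are predicted classes.
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)
    n = cm.sum()
    p_observed = np.trace(cm) / n
    # Agreement expected by chance, from the row and column marginals.
    p_expected = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n**2
    kappa = (p_observed - p_expected) / (1.0 - p_expected)
    return 100.0 * p_observed, kappa
```

For example, `accuracy_and_kappa([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], 3)` gives an OA of about 66.67% but a kappa of only 0.5, because kappa discounts the agreement that would be expected by chance.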

1st Place

Authors: Christian Debes, Andreas Merentitis, Roel Heremans, Jürgen Hahn, Nick Frangiadakis and Tim van Kasteren

Affiliations: Christian Debes, Andreas Merentitis, Roel Heremans, Nick Frangiadakis and Tim van Kasteren are with AGT International; Jürgen Hahn is with the Signal Processing Group at Technische Universität Darmstadt.

Contact Email: cdebes@agtinternational.com

Algorithm Description:

1. Preprocessing: In this step we perform automatic detection of shadows in the hyperspectral image. The output is an index of shadow-covered and shadow-free areas.
2. Feature extraction: Basic substances of the image are derived using a blind hyperspectral unmixing algorithm called the Automatic Target Generation Procedure (ATGP). In total, fifty substances are automatically extracted, and their abundance maps are used as features. In addition, we use the raw LiDAR data as a feature, together with several composite features such as gradients. Similarly, composite features of the hyperspectral data, such as a vegetation index and water vapour absorption, are considered. Finally, regularized Minimum Noise Fraction (MNF) components of the raw hyperspectral data are added, bringing the total to 65 features.
3. Segmentation: A Markov Random Field-based approach is used to segment the image into objects. This allows moving from pixel-based to object-based classification, in which contiguous pixels of a single object can be assigned to one class.
4. Classification: Ensemble methods are used to combine multiple classifiers. Two separate Random Forest ensembles are created based on the shadow index, one for the shadow-covered area and one for the shadow-free area. The Random Forest out-of-bag (OOB) error is used to automatically rank features by their impact, resulting in 45 features being selected for the shadow-free part and 55 for the shadow-covered part. The outputs of the two ensembles and their uncertainties are combined according to the shadow index to produce the classification result.
5. Post-processing: A second Markov Random Field-based approach is used to refine the classification result based on the neighborhood of each pixel and the class uncertainties obtained in the previous step. Furthermore, object detectors are used to perform object-based identification and classification of railways and highways (based on the Hough transform) and of buildings (based on LiDAR features).
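The MRF post-processing step trades off per-pixel class uncertainties against agreement with neighboring labels. The team's exact energy function and optimizer are not described, so the following is only a generic sketch under stated assumptions: a Potts model on a 4-neighborhood, unary costs equal to the negative log of the classifier's class probabilities, and a synchronous (all pixels at once) variant of iterated conditional modes (ICM). The function name `icm_smooth` and the weight `beta` are hypothetical.

```python
import numpy as np

def icm_smooth(prob, beta=1.0, n_iter=5):
    """Synchronous ICM on a Potts MRF (illustrative sketch, hypothetical parameters).

    prob: (H, W, C) per-pixel class probabilities from a classifier.
    beta: cost added for every 4-neighbor whose label disagrees.
    """
    eps = 1e-12
    unary = -np.log(prob + eps)          # confident classes get a low cost
    labels = prob.argmax(axis=2)         # start from the pixel-wise decision
    C = prob.shape[2]
    for _ in range(n_iter):
        # For every pixel and class, count the 4-neighbors currently carrying that class.
        onehot = np.eye(C)[labels]                          # (H, W, C)
        padded = np.pad(onehot, ((1, 1), (1, 1), (0, 0)))   # zeros outside the image
        agree = (padded[:-2, 1:-1] + padded[2:, 1:-1]
                 + padded[1:-1, :-2] + padded[1:-1, 2:])
        n_neighbors = agree.sum(axis=2, keepdims=True)      # fewer at the borders
        # Potts energy: unary cost plus beta per disagreeing neighbor.
        energy = unary + beta * (n_neighbors - agree)
        new_labels = energy.argmin(axis=2)
        if np.array_equal(new_labels, labels):
            break
        labels = new_labels
    return labels
```

Larger `beta` values favor smoother maps; with `beta=0` the result reduces to the pixel-wise argmax of the probabilities.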

Overall Accuracy: 94.43

Kappa Statistic: 0.9396

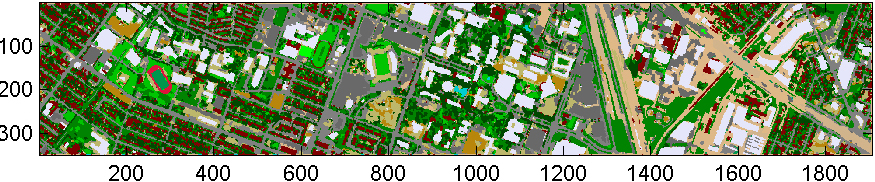

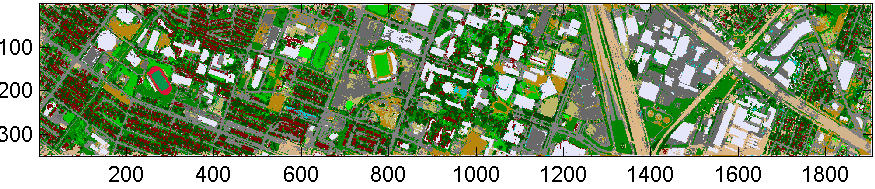

2nd Place

Authors: Bei Zhao, Yanfei Zhong, Ji Zhao, and Liangpei Zhang

Affiliations: State Key Laboratory of Information Engineering in Surveying, Mapping, and Remote Sensing (LIESMARS), Wuhan University, P. R. China

Contact Email: zhongyanfei@whu.edu.cn

Algorithm Description:

The main procedure of the classification is described as follows:

(1) Feature extraction: Minimum noise fraction (MNF) and principal component analysis (PCA) are applied separately to reduce the dimensionality of the spectral features, which decreases the computational complexity of the classification. For both MNF and PCA, the 22 features containing the most information from the hyperspectral image are kept. In addition, the NDVI is calculated to highlight vegetation.

The first three principal components obtained by PCA are used to calculate the gray-level co-occurrence matrix (GLCM) with a window size of 9. The homogeneity measure is then used to extract the texture information of the image.
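The GLCM homogeneity feature can be illustrated with a minimal NumPy sketch for a single band. The number of gray levels (16), the horizontal one-pixel offset, the symmetric GLCM, and reflective padding at the image border are assumptions; the description only specifies the window size of 9. The function names are hypothetical.

```python
import numpy as np

def glcm_homogeneity(window, levels=16, offset=(0, 1)):
    """Homogeneity of a symmetric, normalized GLCM for one quantized window.

    window: 2-D integer array with values in [0, levels).
    offset: non-negative (row, col) displacement of the pixel pair.
    """
    dr, dc = offset
    h, w = window.shape
    a = window[:h - dr, :w - dc].ravel()   # reference pixels
    b = window[dr:, dc:].ravel()           # neighbors at the given offset
    glcm = np.zeros((levels, levels))
    np.add.at(glcm, (a, b), 1)             # count co-occurring gray-level pairs
    glcm = glcm + glcm.T                   # make the matrix symmetric
    glcm /= glcm.sum()                     # joint probabilities P(i, j)
    i, j = np.indices(glcm.shape)
    # Homogeneity = sum of P(i, j) / (1 + (i - j)^2).
    return (glcm / (1.0 + (i - j) ** 2)).sum()

def homogeneity_map(band, win=9, levels=16):
    """Sliding-window homogeneity for one band (e.g., a principal component)."""
    lo, hi = band.min(), band.max()
    q = np.floor((band - lo) / (hi - lo + 1e-12) * levels).astype(int)
    q = np.clip(q, 0, levels - 1)          # quantize to `levels` gray levels
    r = win // 2
    padded = np.pad(q, r, mode="reflect")
    out = np.empty(band.shape)
    for y in range(band.shape[0]):
        for x in range(band.shape[1]):
            out[y, x] = glcm_homogeneity(padded[y:y + win, x:x + win], levels)
    return out
```

Homogeneity equals 1 in a uniform window and drops as neighboring gray levels differ; applying `homogeneity_map` to each of the first three principal components would yield three texture features.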

(2) Classification with classifiers trained on samples of all classes: The 22 spectral features obtained by MNF (or PCA), 1 NDVI feature, 3 GLCM texture features, and 1 DSM feature are stacked into a 27-dimensional feature vector. Three classifiers are employed, namely the maximum likelihood classifier (MLC), the support vector machine (SVM), and multinomial logistic regression (MLR). After this step, six classification maps are obtained: one from each of the three classifiers for the MNF+NDVI+GLCM+DSM features and for the PCA+NDVI+GLCM+DSM features.

(3) Classification combining decision knowledge with classifiers trained on samples of subsets of the classes: In this step, the DSM is divided into ground and non-ground points by a terrain filter, specifically the Axelsson filter. In this binary classification, the non-ground class contains some viaduct points, while the ground class contains some building points. The ground and non-ground labels are then revised by mean shift segmentation of the DSM image, exploiting the gradual change in elevation that characterizes viaducts.

By comparing the binary classification before and after this revision, a class change map is obtained, from which some viaduct pixels can be recognized by applying a morphological opening. These viaduct pixels are later used to post-process the highway class. The 15 classes are then divided into two groups: a ground group containing healthy grass, stressed grass, synthetic grass, soil, water, road, highway, railway, parking lot 1, parking lot 2, tennis court, and running track; and a non-ground group including tree, residential, commercial, parking lot 1, and parking lot 2. A classifier (MLC, SVM, or MLR) is employed to classify the two groups separately using the 27 features described in step (2). The final classification map is obtained by merging the classification maps of the two groups. This step also yields six classification maps.

(4) Fusion of the classification maps and post-classification: An integrated classification map is generated from the 12 classification maps of steps (2) and (3) by majority voting. Building segments are then obtained from the mean shift segmentation result, the non-ground class from step (3), and the tree class of the integrated map; these segments are used to revise the integrated classification map for the residential, commercial, parking lot 1, and parking lot 2 classes. After the building and highway revisions (the latter described in step (3)), smoothing is applied as post-processing to generate the final classification map.
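The fusion of the 12 maps by majority voting can be sketched pixel by pixel as below. This assumes all maps share the same grid and integer class labels; the tie-breaking rule (lowest class index wins, which is what `argmax` does) is an assumption, since the description does not specify one. The function name is hypothetical.

```python
import numpy as np

def majority_vote(maps, n_classes):
    """Fuse several label maps pixel by pixel by majority voting.

    maps: (M, H, W) integer class labels from M classifiers.
    Ties go to the smallest class index (argmax behavior).
    """
    maps = np.asarray(maps)
    votes = np.zeros((n_classes,) + maps.shape[1:], dtype=np.int64)
    for c in range(n_classes):
        votes[c] = (maps == c).sum(axis=0)   # number of maps voting for class c
    return votes.argmax(axis=0)
```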

Overall Accuracy: 93.32

Kappa Statistic: 0.9275

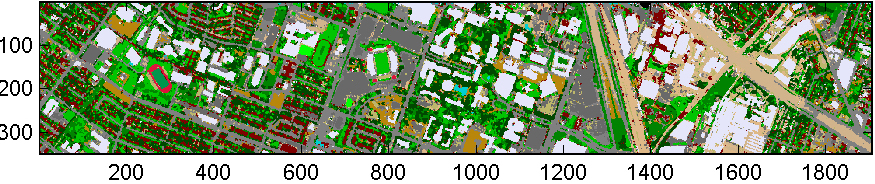

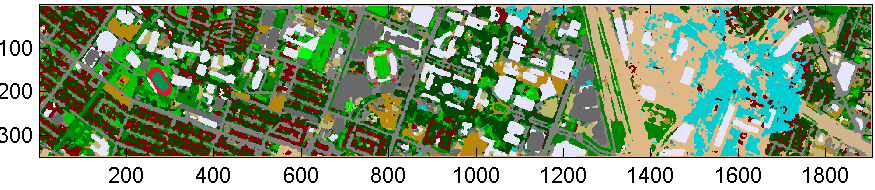

3rd Place

Authors: Janja Avbelj [1,2], Jakub Bieniarz [1], Daniele Cerra [1], Aliaksei Makarau [1], Rupert Müller [1]

Affiliations:

[1] German Aerospace Center (DLR), Earth Observation Center, Remote Sensing Technology Institute, Oberpfaffenhofen, 82234 Wessling, Germany.

[2] Technische Universität München, Chair of Remote Sensing Technology, Arcisstr. 21, 80333 Munich, Germany.

Contact Email: daniele.cerra@dlr.de

Algorithm Description:

We carried out the classification using a 2-layer neural network. The input features were selected to exploit spectral, textural, and height information. We extracted spectral features through sparse unmixing, derivative features, and projection onto the feature space spanned by the prototype vectors associated with the training samples, with a regularization step carried out directly in the feature space based on synergetics theory. The dimensionality of this feature space was reduced by Minimum Noise Fraction. We then selected Gabor features as texture descriptors and derived above-ground height information from the LiDAR DSM. The DSM was first normalized by subtracting the ground height, computed as the set of smaller local maxima in a sliding-window analysis. Further preprocessing steps included compensation of the shaded area in the image and statistical analysis of the training samples. We carried out the former by creating a mask for the affected area and fitting a linear correction function to the ratios between similar materials inside and outside the shade. The latter consisted of two steps: the derivation of 31 homogeneous subclasses from the original (heterogeneous) 15 classes, and automatic statistical testing to remove outliers from each of the 31 subclasses. Furthermore, seven noisy bands were discarded from the dataset. The classification was derived by fusing the outputs of two AdaBoosted 2-layer neural networks, which were trained using a Kalman filter. We refined the classification results by morphological filtering (two openings and closings). Finally, an object-based regularization of commercial areas was carried out based on the above-ground objects detected from the normalized DSM and on spectral information.
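The shadow compensation fits a linear correction function to the ratios between similar materials inside and outside the shade. The sketch below takes one plausible reading of that sentence, which is an assumption rather than the team's documented procedure: per-band ratios of the mean sunlit spectrum to the mean shaded spectrum of the same material are fitted with a straight line over the band index, and every masked pixel is multiplied by the fitted gain. The function name and the sample arrays are hypothetical.

```python
import numpy as np

def shadow_correction(cube, shadow_mask, lit_samples, shaded_samples):
    """Brighten shaded pixels with a gain that is linear in the band index.

    cube: (H, W, B) hyperspectral image; shadow_mask: (H, W) boolean.
    lit_samples: (N, B) spectra of a material outside the shadow.
    shaded_samples: (K, B) spectra of the same material inside the shadow.
    """
    ratios = lit_samples.mean(axis=0) / shaded_samples.mean(axis=0)
    bands = np.arange(cube.shape[2])
    slope, intercept = np.polyfit(bands, ratios, deg=1)   # linear fit over bands
    gain = slope * bands + intercept
    corrected = cube.astype(float)          # astype returns a copy
    corrected[shadow_mask] *= gain          # apply the per-band gain to masked pixels
    return corrected
```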

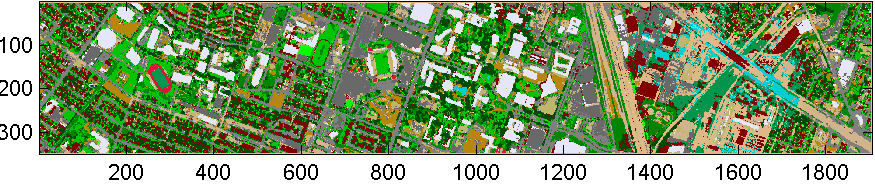

Classification Map:

Overall Accuracy: 93.20

Kappa Statistic: 0.9263

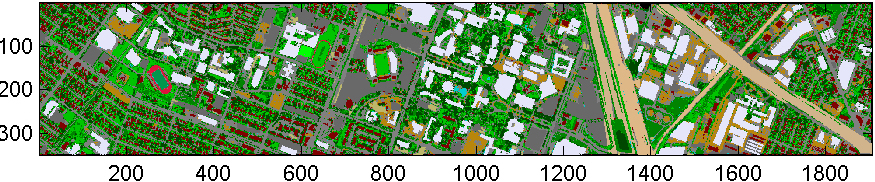

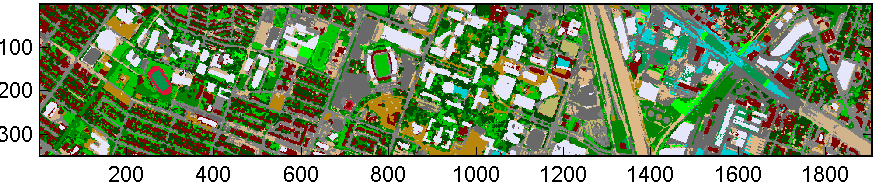

4th Place

Authors: Doron Bar and Svetlana Raboy

Affiliations: Department of Mathematics, Israel Institute of Technology, Israel

Contact Email: doron.e.bar@gmail.com

Algorithm Description:

Classification results for the 2013 Data Fusion Contest of the IEEE Geoscience and Remote Sensing Society are presented. In the contest, we were asked to classify an urban area into 15 different classes, given a hyperspectral image and a LiDAR height map. Our method is composed of three main stages: preprocessing, spectral classification, and spatial classification.

Preprocessing: We started by preprocessing both the spectral image and the height map. The spectral image is partly shaded by a few small clouds; using a novel method, the shadows are removed from the hyperspectral cube. The height map was adjusted so that its zero level matches the ground level.

Spectral classification: Various spectral indices were used. Some are well known, such as the Normalized Difference Vegetation Index, the Water Index, and the Soil Index; others are less well known, such as Vegetation in Stress and the Road Index. At this stage, the processed LiDAR data were used to extract and classify the buildings and to separate trees from grass. The buildings were classified as residential, commercial, or parking lot. Assuming that all parking lot buildings allow parking on the roof, this classification was done by detecting the ramp to the roof.

Spatial classification: The Road Index highlights 5 main classes: roads, highways, railways, and parking lots 1 and 2. To separate these classes, we used spatial features such as long and narrow, long and partially straight, or long and wide. The running tracks were also detected at this stage, based on their distinctive shape: round and large. Due to time constraints, the detection of Parking Lot 2 was left for future research.

These three stages were used to produce the classification map for the 2013 IEEE GRSS Data Fusion Contest. A mismatch between the height map and the spectral image caused some missed detections in our results. The results were produced by a fully automatic algorithm.
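The spatial stage separates road-like classes with shape cues such as "long and narrow" or "long and wide". The authors do not say how these cues were measured; as one possible descriptor, assumed here purely for illustration, the ratio between the major and minor axis lengths of a connected region can be derived from the covariance of its pixel coordinates. The function name `elongation` is hypothetical.

```python
import numpy as np

def elongation(mask):
    """Ratio of major to minor axis length of a binary region (1.0 = no preferred direction).

    Axis lengths are taken from the eigenvalues of the covariance of the
    region's (row, col) pixel coordinates.
    """
    rows, cols = np.nonzero(mask)
    coords = np.stack([rows, cols]).astype(float)
    cov = np.cov(coords)                             # 2x2 covariance of (row, col)
    eig = np.sort(np.linalg.eigvalsh(cov))
    minor, major = np.sqrt(np.maximum(eig, 1e-12))   # guard against zero width
    return major / minor
```

A 2×20 pixel strip scores above 10, while a filled square scores 1. Distinguishing "long and narrow" from "long and wide" would additionally require an absolute width measure, such as the minor axis length itself.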

Classification Map:

Kappa Statistic: 0.9177

5th Place

Authors: Naoto Yokoya*, Tomohiro Matsuki**, Shinji Nakazawa**, and Akira Iwasaki*

Affiliations: * Research Center for Advanced Science and Technology (RCAST), the University of Tokyo, Japan; ** Department of Aeronautics and Astronautics, the University of Tokyo, Japan

Contact Email: yokoya@sal.rcast.u-tokyo.ac.jp

Algorithm Description:

As pre-processing, the authors corrected shadows in the hyperspectral data and terrain effects in the LiDAR-derived DSM data. Shadows caused by clouds in the hyperspectral data were detected by thresholding the illumination distribution calculated from the spectra. Relatively small structures in the thresholded illumination map were removed, based on the assumption that cloud shadows are larger than structures on the ground. Since bright buildings in cloud shadows cannot be detected as shaded areas, all large buildings were detected by spatial labeling using the DSM data and the NDVI obtained from the hyperspectral data. Buildings surrounded by shadows were treated as candidate shaded areas, and unmixing was applied to buildings partially surrounded by shadows to estimate a shadow-degree map on the buildings. Shadows cast by buildings were computed by line-of-sight analysis, using the DSM data and the position of the sun at the time of the hyperspectral data acquisition. The final shadow map was obtained by combining all detected shaded areas, and shadow effects were corrected using shading coefficients derived from a comparison of shaded and non-shaded areas of the same materials, e.g., partially shadowed buildings. The differential image of the DSM data was used to identify effective height values with reduced terrain effects, which contributes to accurate classification. After pre-processing, the nearest neighbor (NN) algorithm was applied to a fused feature combining spectral angle and height difference. The two features were normalized and fused by a weighted sum, with the weights determined by cross-validation. Large buildings estimated in the shadow detection process were classified by a weighted NN algorithm, which uses prior information from the training data that many large buildings belong to a limited number of classes. The final classification result was obtained by applying iterative high-dimensional bilateral filtering to the NN classification map to reduce noise.
The class label at each pixel was replaced by a weighted vote of the class labels of nearby pixels in a window. The weights follow a Gaussian function of both the 3D spatial Euclidean distance and the radiometric difference calculated from the spectra. With a small window, the iterative high-dimensional bilateral filter works as an edge-preserving, noise-reducing smoothing filter for the classification map.
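The weighted voting described above can be sketched as follows. The window radius, the two Gaussian widths, the number of iterations, and the use of (row, column, height) as the 3D position are assumptions for illustration, as is the choice that pixels outside the image cast no votes. The function name `bilateral_vote` is hypothetical.

```python
import numpy as np

def bilateral_vote(labels, coords3d, spectra, n_classes, radius=1,
                   sigma_s=1.0, sigma_r=0.1, n_iter=3):
    """Iterative bilateral weighted voting on a classification map (sketch).

    labels: (H, W) ints; coords3d: (H, W, 3) row, col, height;
    spectra: (H, W, B). Radius, sigmas and n_iter are hypothetical settings.
    """
    H, W = labels.shape
    pad2 = ((radius, radius), (radius, radius), (0, 0))
    # Positions and spectra are edge-padded; padded labels are all-zero one-hot
    # vectors, so pixels outside the image cast no votes.
    p_xyz = np.pad(coords3d, pad2, mode="edge")
    p_spec = np.pad(spectra, pad2, mode="edge")
    size = 2 * radius + 1
    for _ in range(n_iter):
        p_lab = np.pad(np.eye(n_classes)[labels], pad2)
        votes = np.zeros((H, W, n_classes))
        for dy in range(size):
            for dx in range(size):
                n_xyz = p_xyz[dy:dy + H, dx:dx + W]
                n_spec = p_spec[dy:dy + H, dx:dx + W]
                d_s = ((n_xyz - coords3d) ** 2).sum(axis=2)   # squared 3D distance
                d_r = ((n_spec - spectra) ** 2).sum(axis=2)   # squared spectral distance
                w = np.exp(-d_s / (2 * sigma_s ** 2) - d_r / (2 * sigma_r ** 2))
                votes += w[..., None] * p_lab[dy:dy + H, dx:dx + W]
        labels = votes.argmax(axis=2)
    return labels
```

Because the spectral term shrinks the weights across material boundaries, isolated labels inside homogeneous regions tend to be overwritten while boundaries between spectrally different materials tend to survive.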

Classification Map:

Overall Accuracy: 90.65

Kappa Statistic: 0.8985

6th Place

Authors: Wenzhi Liao, Rik Bellens, Aleksandra Pizurica, Sidharta Gautama, Wilfried Philips

Affiliations: TELIN-IPI-iMinds, Ghent University

Contact Email: wenzhi.liao@telin.ugent.be

Algorithm Description: Automatic interpretation of remotely sensed images remains challenging. Nowadays, very diverse sensor technologies and image processing algorithms allow us to measure different aspects of objects on the Earth (spectral characteristics in hyperspectral images, height in LiDAR data, geometry through image processing techniques such as morphological profiles). No single technology is sufficient for reliable classification, but combining many of them can lead to problems such as the curse of dimensionality and excessive computation time. Applying feature reduction techniques to all the features together is not a good idea, because it does not take into account the differences in structure among the feature spaces. Decision fusion, on the other hand, has difficulty modeling correlations between the different data sources. We propose a graph-based fusion method that couples dimensionality reduction and feature fusion of the spectral information (of the original hyperspectral image) and the features extracted by morphological profiles computed on both the hyperspectral and LiDAR data. We first build a fusion graph in which only feature points with similar spectral, spatial, and elevation characteristics are connected. Then we solve the multi-sensor data fusion problem by projecting the features onto a linear subspace, such that neighboring data points (i.e., those with similar spectral, spatial, and elevation characteristics) in the high-dimensional feature space remain neighbors in the low-dimensional projection subspace.
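The fusion graph and projection can be illustrated with a locality preserving projection (LPP) computed on a graph whose edges require agreement among all sources. This is a simplified sketch, not the team's implementation: the binary k-nearest-neighbor graphs, the value of `k`, the logical AND used to fuse them, and the small ridge term `reg` are assumptions, and the function names are hypothetical. The projection vectors solve the generalized eigenproblem X^T L X a = λ X^T D X a, keeping the smallest eigenvalues, where L = D - W is the Laplacian of the fused graph.

```python
import numpy as np

def knn_adjacency(X, k):
    """Symmetric boolean k-nearest-neighbor adjacency for the rows of X (small N only)."""
    d = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
    np.fill_diagonal(d, np.inf)                  # a sample is not its own neighbor
    idx = np.argsort(d, axis=1)[:, :k]
    A = np.zeros(d.shape, dtype=bool)
    A[np.arange(len(X))[:, None], idx] = True
    return A | A.T

def fused_lpp(X_spec, X_spat, X_elev, k=5, n_components=2, reg=1e-6):
    """LPP on a fused graph: samples are linked only if they are neighbors in all sources."""
    A = knn_adjacency(X_spec, k) & knn_adjacency(X_spat, k) & knn_adjacency(X_elev, k)
    X = np.hstack([X_spec, X_spat, X_elev])      # stacked features, (N, D)
    X = X - X.mean(axis=0)
    Wg = A.astype(float)
    D = np.diag(Wg.sum(axis=1))
    L = D - Wg                                   # graph Laplacian
    M = X.T @ L @ X
    B = X.T @ D @ X + reg * np.eye(X.shape[1])   # ridge keeps B positive definite
    # Reduce M a = lam B a to a symmetric problem via the Cholesky factor B = C C^T.
    Cinv = np.linalg.inv(np.linalg.cholesky(B))
    vals, vecs = np.linalg.eigh(Cinv @ M @ Cinv.T)   # eigenvalues in ascending order
    P = Cinv.T @ vecs[:, :n_components]          # back-transform to projection vectors
    return X @ P, P
```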

Classification Map:

Overall Accuracy: 90.30

Kappa Statistic: 0.8948

7th Place

Authors: Giona Matasci¹, Michele Volpi¹ and Devis Tuia²

Affiliations: 1. Center for Research on Terrestrial Environment (CRET), University of Lausanne, Switzerland. 2. Laboratory of Geographic Information Systems (LaSIG), Ecole Polytechnique Fédérale de Lausanne, Switzerland.

Contact Email: giona.matasci@unil.ch

Algorithm Description: We applied the recently proposed active set algorithm to the hyperspectral and LiDAR datasets. Starting from an initial set of variables, the algorithm proceeds with forward feature selection among randomly generated spatial features (morphological and texture filters) from both sources (PCA transformations of the hyperspectral image and the raw LiDAR height map), and selects the most promising ones without a priori knowledge of the filterbank. The candidate filters are generated within the (possibly infinite) space of filter parameters (filter types, scales, orientations, etc.). The most useful feature is then selected based on the optimality conditions of a linear SVM: if a candidate feature violates these conditions, the SVM error will decrease when it is added to the current active set. This allows the automatic selection of the optimal features for the classification task at hand, similar to what active learning algorithms do with pixels. In this way, the classifier and the relevant features are learned at the same time. The obtained maps were fused by majority voting with those produced by an SVM classifier with a Gaussian kernel. This model was trained with the same type of spatial filters, complemented with spectral indices (NDVI on different band combinations) chosen by expert knowledge. All merged maps are the output of classification schemes based exclusively on training sets of labeled pixels drawn from the available ground truth. Finally, a spatial majority vote with a 5×5 moving window was applied to reduce the noise in the final classification map.
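The final 5×5 spatial majority vote can be sketched as below. Counting only pixels inside the image at the borders and resolving ties toward the lowest class index are assumptions; the function name is hypothetical.

```python
import numpy as np

def spatial_majority(labels, n_classes, size=5):
    """Replace each label by the most frequent label in a size x size window."""
    r = size // 2
    H, W = labels.shape
    onehot = np.eye(n_classes, dtype=np.int64)[labels]     # (H, W, C)
    padded = np.pad(onehot, ((r, r), (r, r), (0, 0)))       # zeros outside the image
    counts = np.zeros_like(onehot)
    for dy in range(size):
        for dx in range(size):
            counts += padded[dy:dy + H, dx:dx + W]          # accumulate window counts
    return counts.argmax(axis=2)                            # ties: lowest class index
```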

Classification Map:

Overall Accuracy: 89.92

Kappa Statistic: 0.8909

8th Place

Authors: Hankui Zhang, Bo Huang

Affiliations: Department of Geography and Resource Management, The Chinese University of Hong Kong, Shatin, N.T., Hong Kong

Contact Email: zhanghankui@gmail.com

Algorithm Description: In this classification algorithm, we integrated spectral and spatial information and prior knowledge using the minimum noise fraction (MNF), support vector machine (SVM), and Markov random field (MRF) techniques. The hyperspectral images are first transformed into a feature space using the minimum noise fraction. Texture information is also extracted from the LiDAR image, stacked with the selected MNF bands, and fed into the non-parametric SVM classifier. Prior knowledge is then incorporated through the MRF. MNF, an improved version of PCA, is a subspace extraction technique that orders components by image quality (signal-to-noise ratio). In previous comparisons of feature selection/extraction techniques for the SVM classifier, MNF has proved to be one of the best, although no specific reason was given. In fact, for a non-parametric classifier, the Hughes phenomenon may be caused by noise in the observed signal; MNF can therefore perform best because it suppresses noise during feature extraction. We split the training samples into two parts: one part with 100 samples per class for training, and the remaining samples for validation. We found that the first 19 MNF features gave the best accuracy; these are stacked with the texture features of the LiDAR bands to form the input classification vectors. After setting the input features, the MRF is used to incorporate prior knowledge, especially the shape or geometric pattern of land cover objects. We found that a railway or a highway should be continuous unless the image edge or a cloud shadow is encountered. A tennis court, parking lot (including parking lot 1 and parking lot 2), or running track should not be a small islet of fewer than 20 pixels. These cover types are minorities in the image, and pixels of majority classes such as buildings are likely to be falsely assigned to them.
Thus this knowledge is used to filter out misclassified pixels, which is implemented in combination with a commonly used spatial MRF technique to obtain the final classification result.
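Since this entry motivates MNF by its noise suppression, a compact sketch of the transform may help. It assumes the common shift-difference noise estimate (differences between horizontally adjacent pixels, which presumes locally smooth signal and independent noise); the authors do not state how they estimated the noise covariance, and the function name is hypothetical. The noise is whitened first, and the data covariance is then diagonalized in that space, so the eigenvalues are signal-plus-noise to noise ratios and the components come out in decreasing order of SNR.

```python
import numpy as np

def mnf(cube, n_components):
    """Minimum noise fraction transform of an (H, W, B) cube (sketch).

    Returns the first n_components MNF bands and all eigenvalues in
    decreasing order (signal-plus-noise to noise ratios).
    """
    cube = np.asarray(cube, dtype=float)
    H, W, B = cube.shape
    X = cube.reshape(-1, B)
    X = X - X.mean(axis=0)
    # Shift-difference noise estimate: differencing two neighbors doubles
    # the variance of independent noise, hence the division by sqrt(2).
    noise = (cube[:, 1:, :] - cube[:, :-1, :]).reshape(-1, B) / np.sqrt(2.0)
    sigma_n = np.cov(noise, rowvar=False)
    sigma_x = np.cov(X, rowvar=False)
    # Step 1: whiten the noise covariance.
    evals_n, evecs_n = np.linalg.eigh(sigma_n)
    whiten = evecs_n / np.sqrt(np.maximum(evals_n, 1e-12))
    # Step 2: diagonalize the data covariance in the noise-whitened space.
    evals, evecs = np.linalg.eigh(whiten.T @ sigma_x @ whiten)
    order = np.argsort(evals)[::-1]              # highest SNR first
    T = whiten @ evecs[:, order[:n_components]]
    return (X @ T).reshape(H, W, n_components), evals[order]
```

Keeping the first 19 components, as in the description, would correspond to `mnf(cube, 19)`.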

Classification Map:

Overall Accuracy: 87.39

Kappa Statistic: 0.8631

9th Place

Authors: Yin Zhou, Ana Remirez, Luisa Polania, Sherin Mathews, Gonzalo Arce, Kenneth Barner

Affiliations: University of Delaware

Contact Email: zhouyin@udel.edu

Algorithm Description: A novel discriminative dictionary learning algorithm is proposed for hyperspectral and LiDAR image classification. Two Extended Morphological Profiles (EMPs) are extracted for each pixel, one from the hyperspectral image cube and one from the LiDAR image. The final feature of each pixel is obtained by projecting the two EMPs into two separate low-dimensional feature spaces via Linear Discriminant Analysis. Observable objects seen from above are approximately homogeneously textured in the hyperspectral image cube and consistently characterized in terms of elevation by the LiDAR image, so nearby pixels are more likely to share the class label of the target pixel. That is, the discriminative information resides in the local neighborhood of each pixel. Therefore, we propose a novel discriminative dictionary learning algorithm that extends our recent work, Locality-Constrained Dictionary Learning. Specifically, we assume that the feature of every pixel resides on a hidden, intrinsic nonlinear manifold, which is typically of much lower dimension than the data captured in the observation space. We have shown in theory that approximating an unobservable intrinsic manifold by a few latent landmark points residing on the manifold can be cast as a dictionary learning problem over the observation space. By incorporating a classification error penalty term into a unified objective function, the dictionary and the classifier are jointly trained using the provided training features. The algorithm converges quickly, typically within 15 iterations. The test data are classified by first computing the locally linear reconstruction code over the dictionary and then mapping the code to a label vector via the classifier. Classification of high-resolution images is a challenging problem, as an image may contain almost a million pixels with tens or hundreds of feature dimensions.
Despite their great success, traditional classification algorithms such as SVM have cubic complexity in the number of training samples, which requires exorbitant computation and memory and may not meet practical needs. In contrast, the newly proposed algorithm is very efficient, taking approximately 3 minutes for the entire 349-by-1905 image in the MATLAB environment.
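The test-time step (a locally linear reconstruction code over the dictionary, then a mapping to a label vector) can be sketched with the approximated locality-constrained linear coding scheme, in which only the k nearest atoms receive nonzero weights and the weights sum to one. The dictionary and the linear classifier `W_clf` are taken as given here; the joint training described above is not reproduced, and `k`, `reg`, and the function names are hypothetical.

```python
import numpy as np

def llc_code(x, dictionary, k=5, reg=1e-4):
    """Approximate locality-constrained linear code of x over a dictionary.

    dictionary: (K, D) atoms as rows. Only the k nearest atoms get weights,
    found by sum-to-one constrained least squares.
    """
    d2 = ((dictionary - x) ** 2).sum(axis=1)
    nn = np.argsort(d2)[:k]                 # indices of the k nearest atoms
    Z = dictionary[nn] - x                  # atoms shifted to the sample
    C = Z @ Z.T                             # local covariance (k x k)
    C += reg * np.trace(C) * np.eye(k) + 1e-12 * np.eye(k)   # regularize
    w = np.linalg.solve(C, np.ones(k))
    w /= w.sum()                            # enforce sum-to-one
    code = np.zeros(len(dictionary))
    code[nn] = w
    return code

def classify(x, dictionary, W_clf, k=5):
    """Map the code to a label vector with a linear classifier W_clf of shape (C, K)."""
    return int(np.argmax(W_clf @ llc_code(x, dictionary, k)))
```

Because only k atoms are involved per pixel, coding costs a small k×k solve plus a nearest-neighbor search, which is consistent with the efficiency argument above.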

Classification Map:

Overall Accuracy: 86.15

Kappa Statistic: 0.8501

10th Place

Authors: Stefan Uhlmann*, Serkan Kiranyaz*, Alper Yildirm**

Affiliations: *Tampere University of Technology, Tampere, Finland; **TUBITAK, Ankara, Turkey

Contact Email: stefan.uhlmann@tut.fi

Algorithm Description:

Our approach is divided into two steps: first, generating additional features; second, applying a simple semi-supervised learning and ensemble classification approach.

Features: Based on the original 144 hyperspectral (HS) bands, we generated the following additional feature vectors (FV). FV1: the Normalized Difference Vegetation Index (NDVI) and Enhanced Vegetation Index (EVI), computed per pixel. FV2: ratio transformations (as done for multispectral data) of various bands, to emphasize differences between surface materials such as vegetation and man-made areas. We also generated a natural color composite image, which was further contrast enhanced, and extracted two texture features from it. FV3: Local Binary Pattern (LBP) within a 3×3 pixel neighborhood. FV4: Gabor wavelets with 8 scales and 6 orientations, of which only the 8th scale is used, as the others are too coarse for pixel-based classification. FV5: per-pixel color information in the form of the Hue and Saturation of the HSV color space and Cb and Cr, the blue-difference and red-difference chroma components of the YCbCr color space.

Classification: We consider the combination of HS bands and LiDAR as a single feature set (FS1), and further combined each single FVn with the original HS bands and LiDAR data into 5 additional feature sets: FS1 = HS bands + LiDAR; FS2 = FS1 + FV1 (NDVI, EVI); FS3 = FS1 + FV2 (ratio transformations); FS4 = FS1 + FV3 (LBP); FS5 = FS1 + FV4 (Gabor); FS6 = FS1 + FV5 (color components). Each feature set is classified by a pairwise SVM with a polynomial kernel of degree 3, with the parameters C and gamma optimized by grid search. Additionally, we applied one round of semi-supervised learning to enlarge the initial training set and (possibly) improve generalization, selecting pixels from each class whose confidence lies between 80% and 90%.
This enlarged training set (10k to 12k samples, depending on the feature set) is used for a final training on each of the 6 feature sets. A classification map is generated by majority voting over the individual outputs of the 6 feature sets. The final classification result is obtained by post-processing that eliminates single-pixel misclassifications with respect to their neighborhood, exploiting the local smoothness (consistency) constraint that nearby points are likely to have the same label. We did not perform any shadow removal or unclassified pixel detection.
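FV3 is a 3×3 local binary pattern. A minimal sketch of the basic 8-neighbor LBP follows. The clockwise neighbor order, the greater-or-equal comparison, and edge replication at the image border are assumptions, as these details are not given; the function name is hypothetical.

```python
import numpy as np

def lbp_3x3(img):
    """Basic 8-neighbor local binary pattern code (0 to 255) for each pixel."""
    img = np.asarray(img, dtype=float)
    H, W = img.shape
    p = np.pad(img, 1, mode="edge")          # replicate border pixels
    # Neighbor positions in the padded image, clockwise from the top-left;
    # (1, 1) would be the center pixel itself.
    offsets = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]
    code = np.zeros((H, W), dtype=np.int32)
    for bit, (dy, dx) in enumerate(offsets):
        neighbor = p[dy:dy + H, dx:dx + W]
        # Set this bit where the neighbor is at least as bright as the center.
        code |= (neighbor >= img).astype(np.int32) << bit
    return code
```

A flat region yields the code 255 everywhere, while a pixel brighter than all eight neighbors yields 0.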

Classification Map:

Overall Accuracy: 85.96

Kappa Statistic: 0.8480